Portrait Weekly Framing: Marvell's $94B Data Center Vision

Welcome to this week's edition of the Portrait Weekly Framing. Today, we examine the implications of Marvell Technology's sharp upward revision to its data center total addressable market (TAM) forecast.

At its recent Custom AI Investor Event, Marvell projected that its data center TAM will reach $94 billion by calendar year 2028. This is a substantial increase from the company's previous estimate and reflects management's view that demand for AI infrastructure and data center solutions is accelerating.

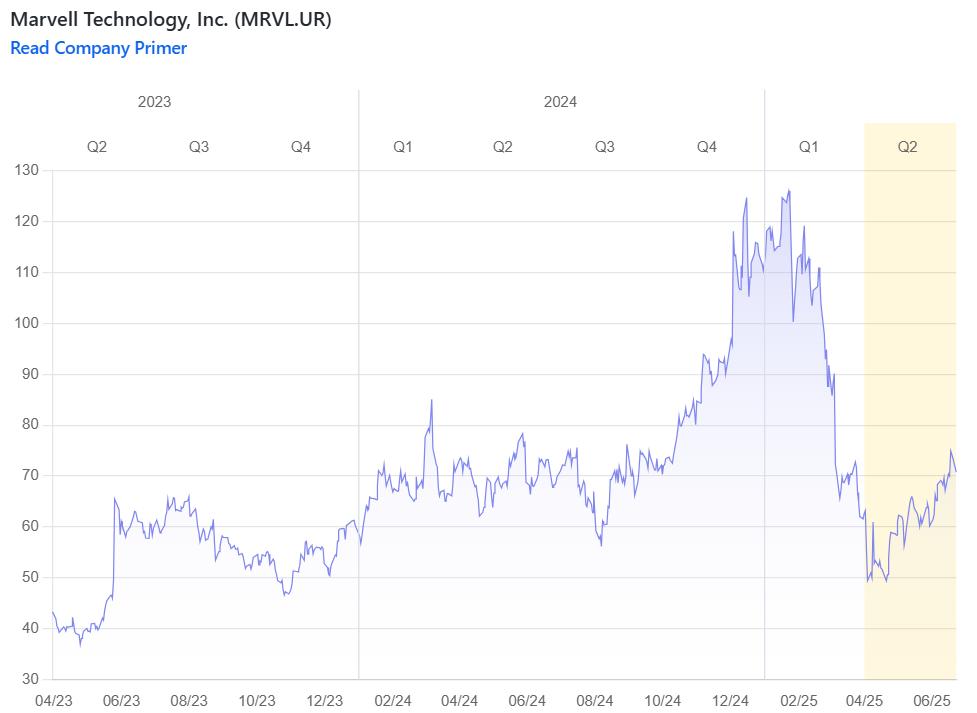

The market responded positively to Marvell's updated outlook, with shares rising ~4.3% following the announcement. The revised TAM suggests that the AI-driven transformation of data center infrastructure may be happening faster and at a larger scale than previously anticipated. The implications extend beyond Marvell itself: the revision may signal a broader reassessment of the opportunity for companies serving the data center and AI infrastructure markets.

To better understand the significance of Marvell's announcements and their broader market implications, I decided to use Portrait's Research tool to analyze the key takeaways from the investor event. I ran the following research query:

Analyze Marvell Technology's recent custom AI investor event, focusing on the company's revised data center TAM forecast to $94B for CY28. What were the key drivers behind this upward revision, and what does this suggest about the broader AI infrastructure market opportunity? Additionally, evaluate how this positions Marvell relative to its competitive landscape and what this means for the company's growth prospects.

The analysis revealed several key insights about both Marvell's positioning and the broader AI infrastructure market dynamics. The TAM revision was driven by accelerating growth in custom compute markets, with the custom XPU segment representing $40 billion growing at a 47% CAGR, and an emerging XPU attach market of $15 billion growing at a remarkable 90% CAGR. This growth is underpinned by dramatic increases in hyperscaler capital expenditure, with the top 4 U.S. hyperscalers' CapEx growing from $150 billion in 2023 to over $200 billion in 2024, with projections exceeding $300 billion in 2025.

Executive Summary

Marvell Technology's custom AI investor event on June 17, 2025, revealed a significant upward revision to the company's data center Total Addressable Market (TAM) forecast, increasing from $75 billion to $94 billion for calendar year 2028. This 26% increase reflects accelerating growth in custom AI infrastructure, with the revised TAM now projecting a 35% compound annual growth rate (CAGR) compared to the previously estimated 30% CAGR. The revision positions Marvell at the center of a rapidly expanding AI infrastructure opportunity while highlighting the company's strengthened competitive position in custom silicon solutions.

Revised TAM Forecast and Key Drivers

TAM Revision Details

Marvell's data center TAM revision represents a substantial increase in the addressable market opportunity. The company previously projected a $75 billion TAM growing at almost 30% CAGR, but now forecasts $94 billion in 2028 with a 35% CAGR (Marvell Technology, Inc. - Shareholder/Analyst Call). The revision reflects faster-than-expected growth across multiple segments: the compute forecast is almost 30% higher than last year's projection, and the interconnect forecast is about 37% higher (Marvell Technology, Inc. - Shareholder/Analyst Call).
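As a back-of-envelope check on these figures, the short Python sketch below computes the size of the revision and the starting TAM that each CAGR implies. It assumes each CAGR runs over five years from a CY2023 base to CY2028; that base year is an assumption, not something stated in the event materials quoted above.

```python
def pct_change(new: float, old: float) -> float:
    """Percentage change from old to new."""
    return (new / old - 1) * 100


def implied_base(end_value: float, cagr: float, years: int) -> float:
    """Starting value that grows to end_value at `cagr` per year over `years` years."""
    return end_value / (1 + cagr) ** years


YEARS = 5  # assumption: CY2023 -> CY2028 growth window

revision = pct_change(94, 75)          # new vs. previous CY2028 TAM, $B
new_base = implied_base(94, 0.35, YEARS)
old_base = implied_base(75, 0.30, YEARS)  # "almost 30%" treated as 30%

print(f"Revision: {revision:.1f}%")
print(f"Implied CY2023 base (new forecast): ${new_base:.1f}B")
print(f"Implied CY2023 base (old forecast): ${old_base:.1f}B")
```

On the rounded endpoints, the revision works out to about 25%, slightly below the 26% cited in this report, which may reflect unrounded underlying figures. Under the five-year assumption, both forecasts start from a similar base of roughly $20 to $21 billion, so the revision would come mainly from a steeper growth curve rather than a larger starting market.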

Custom Compute Market Expansion

The most significant driver behind the TAM revision is the explosive growth in custom compute markets, which Marvell breaks down into two primary categories:

XPU Market: The custom XPU segment represents $40 billion of the total TAM, growing at a 47% CAGR. These are the largest and most complex chips where the technology required to compete is accelerating (Marvell Technology, Inc. - Shareholder/Analyst Call).

XPU Attach Market: This emerging category represents $15 billion with a remarkable 90% CAGR. XPU attach covers custom silicon outside the interconnect, switching, and storage categories, encompassing network interface controllers (NICs), power management ICs, scale-up fabric, specialized coprocessors, and memory/storage poolers and expanders. As noted in the event, "When you look out to 2028, the custom XPU attach market is of the same magnitude of the entire custom silicon market today for the cloud" (Marvell Technology, Inc. - Shareholder/Analyst Call).
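The two custom compute segments can be checked the same way. The sketch below sums them, compares the total with the $94 billion data center TAM, and backs out the CY2023 size each CAGR would imply, again assuming a five-year growth window (an assumption, not a reported figure).

```python
# CY2028 segment sizes ($B) and CAGRs as reported at the event
segments = {
    "custom XPU": (40.0, 0.47),
    "custom XPU attach": (15.0, 0.90),
}
TOTAL_DC_TAM = 94.0  # $B, CY2028
YEARS = 5  # assumption: CY2023 -> CY2028 growth window

custom_total = sum(size for size, _ in segments.values())
share_of_tam = custom_total / TOTAL_DC_TAM

# Starting size each segment would need to reach its 2028 value at its CAGR
implied_base = {
    name: size / (1 + cagr) ** YEARS for name, (size, cagr) in segments.items()
}

print(f"Custom compute TAM: ${custom_total:.0f}B ({share_of_tam:.0%} of data center TAM)")
for name, base in implied_base.items():
    print(f"{name}: implied CY2023 base ~${base:.1f}B")
```

Under that assumption, the attach segment would be growing from well under $1 billion, which helps explain why its CAGR is so much steeper than the XPU segment's even though it ends up less than half the size.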

Hyperscaler CapEx Growth and Market Expansion

The TAM revision is underpinned by dramatic increases in hyperscaler capital expenditure commitments. The top 4 U.S. hyperscalers' CapEx has grown from $150 billion in 2023 to over $200 billion in 2024, with projections exceeding $300 billion in 2025 (Marvell Technology, Inc. - Shareholder/Analyst Call). Beyond the established hyperscalers, a new wave of companies is investing in AI data infrastructure, including emerging hyperscalers building foundational models and companies developing specialized AI applications.
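The year-over-year growth behind these CapEx figures is easy to compute. Because the 2024 and 2025 values are stated as floors ("over" and "exceeding"), the sketch treats them as approximate point values.

```python
# Top-4 U.S. hyperscaler CapEx ($B). The 2024 and 2025 values are stated as
# floors ("over $200B", "exceeding $300B"), so the ratios are approximate.
capex = {2023: 150, 2024: 200, 2025: 300}

years = sorted(capex)
growth = {
    (prev, cur): capex[cur] / capex[prev] - 1
    for prev, cur in zip(years, years[1:])
}
for (prev, cur), g in growth.items():
    print(f"{prev} -> {cur}: ~{g:.0%}")

print(f"2023 -> 2025 multiple: ~{capex[2025] / capex[2023]:.1f}x")
```

Taken at face value, top-4 CapEx roughly doubles from 2023 to 2025, with growth accelerating from about a third in 2024 to about a half in 2025.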

The market expansion extends beyond traditional players, with the rise of sovereign AI initiatives as nations invest in building local AI infrastructure. Analysts are forecasting total data center CapEx exceeding $1 trillion in 2028, with total data center CapEx growing faster than the top 4 hyperscalers (51% CAGR versus 46% CAGR) (Marvell Technology, Inc. - Shareholder/Analyst Call).

Broader AI Infrastructure Market Implications

Workload Diversification Driving Specialization

The TAM revision reflects fundamental changes in AI infrastructure requirements driven by workload diversification. As workloads have diversified significantly across pre-training, post-training, and inference applications, specialization has become increasingly important for superior total cost of ownership and performance. This trend supports the thesis that "if you're in the business of building an AI factory, one size does not fit all" (Marvell Technology, Inc. - Shareholder/Analyst Call).

Platform Customization Beyond Silicon

The customization trend extends beyond individual chips to entire platforms, as general-purpose platforms originally designed for racks of servers have become bottlenecks for AI infrastructure. The largest hyperscalers have built full custom platforms to house their custom XPUs, while new approaches are emerging including third-party accelerated infrastructure optimized platforms and standards-based accelerated infrastructure platforms (Marvell Technology, Inc. - Shareholder/Analyst Call).

Marvell's Competitive Positioning

Market Share and Customer Traction

Marvell's competitive position has strengthened significantly since the previous year's AI event. The company now has 18 different sockets in the custom compute market, including 12 sockets adopted by the top 4 U.S. hyperscalers (3 custom XPU sockets and 9 custom XPU attach sockets) and 6 sockets adopted by emerging hyperscalers (2 custom XPU sockets and 4 custom XPU attach sockets) (Marvell Technology, Inc. - Shareholder/Analyst Call).
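Tallying the socket counts confirms that they sum to 18 and shows how the wins split between categories; all inputs are taken from the paragraph above.

```python
# (custom XPU sockets, custom XPU attach sockets) by customer group
sockets = {
    "top-4 U.S. hyperscalers": (3, 9),
    "emerging hyperscalers": (2, 4),
}

xpu_total = sum(xpu for xpu, _ in sockets.values())
attach_total = sum(attach for _, attach in sockets.values())
total = xpu_total + attach_total

print(f"XPU: {xpu_total}, XPU attach: {attach_total}, total: {total}")
print(f"Attach share of sockets: {attach_total / total:.0%}")
```

Roughly seven in ten of the current sockets are attach designs, in line with the emphasis on the XPU attach market as an emerging growth driver.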

In 2023, Marvell held less than 5% share of the custom compute market; the company is now targeting 20% share of the $55 billion custom compute TAM by 2028. This ambitious target reflects confidence in the company's ability to capture a meaningful portion of both the $40 billion XPU market and the $15 billion XPU attach market (Marvell Technology, Inc. - Shareholder/Analyst Call).

Technology Leadership and Differentiation

Marvell's competitive advantage stems from its position as a unique end-to-end full-service custom silicon provider. The company brings together system architecture, design IP, silicon services, packaging expertise, and full manufacturing/logistics support, eliminating the need for customers to "cobble together IP from third parties or hire design houses" (Marvell Technology, Inc. - Shareholder/Analyst Call).

The company's technology leadership is evidenced by its proven track record on leading-edge process nodes, with volume production in 5-nanometer and 3-nanometer, test chips on 2-nanometer, and development on A16 and A14 nodes. Marvell has also demonstrated breakthrough capabilities including the world's first 448G SerDes and industry-leading custom SRAM solutions that deliver 17x the bandwidth per square millimeter while consuming 66% less power than standard solutions (Marvell Technology, Inc. - Shareholder/Analyst Call).

Pipeline and Revenue Potential

Marvell's opportunity pipeline has expanded substantially, with more than 50 additional opportunities beyond the 18 current sockets, spanning more than 10 customers with projected lifetime revenue of $75 billion. The pipeline breakdown shows approximately one-third are custom XPU opportunities (worth multiple billions of dollars over 18-24 month lifespans) and two-thirds are custom XPU attach opportunities (worth several hundred million dollars over 2-4 year lifespans) (Marvell Technology, Inc. - Shareholder/Analyst Call).

Growth Prospects and Financial Trajectory

Revenue Growth and Business Mix Evolution

Marvell's data center business has demonstrated exceptional growth momentum, with revenue increasing from $816.4 million in Q1 FY2025 to $1,440.6 million in Q1 FY2026, representing 76% year-over-year growth (MRVL.UR 8-K 05/29/25 Earnings Release). The data center segment has evolved from 70% of total revenue in Q1 FY2025 to 76% in Q1 FY2026, indicating continued business mix transformation toward AI infrastructure (MRVL.UR 8-K 05/29/25 Earnings Release).
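The data center growth rate can be reproduced from the two revenue figures. The sketch also divides data center revenue by its rounded share of the total to approximate total company revenue; those totals are rough derivations, not reported numbers.

```python
# Marvell data center revenue ($M) and share of total revenue, as cited above
dc_revenue = {"Q1 FY2025": 816.4, "Q1 FY2026": 1440.6}
dc_share = {"Q1 FY2025": 0.70, "Q1 FY2026": 0.76}

yoy = dc_revenue["Q1 FY2026"] / dc_revenue["Q1 FY2025"] - 1
print(f"Data center YoY growth: {yoy:.1%}")

# Total revenue implied by the rounded shares (approximate, not reported figures)
implied_total = {q: dc_revenue[q] / dc_share[q] for q in dc_revenue}
for q, total in implied_total.items():
    print(f"{q}: implied total revenue ~${total:,.0f}M")
```

The computed growth of about 76.5% is consistent with the 76% cited.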

Custom Silicon Revenue Acceleration

In Q4 fiscal 2025, approximately 25% of data center revenue was custom silicon, with the company expecting custom to grow to greater than 50% of cloud AI revenue over time (Marvell Technology, Inc. - Shareholder/Analyst Call). The company expects a larger step-up in calendar 2027 as several new programs, including a major XPU socket, hit mass production, positioning Marvell to achieve its 20% target by 2028 on a market that has grown to almost $95 billion (Marvell Technology, Inc. - Shareholder/Analyst Call).

Strategic Portfolio Management

The sale of Marvell's Automotive Ethernet business to Infineon for $2.5 billion demonstrates strategic focus on higher-growth AI infrastructure opportunities (Infineon Technologies AG, Marvell Technology, Inc. - M&A Call (Presentation)). This transaction, representing approximately 10-11x revenue multiple for a business with $225-250 million in annual revenue and 60% gross margins, provides additional capital flexibility for investments in custom chip and co-packaged optics development (News - April 2025 - MRVL.UR).
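The quoted revenue multiple follows directly from the sale price and the revenue range:

```python
price_m = 2_500               # $M sale price
revenue_range_m = (225, 250)  # $M annual revenue of the divested business

# Higher revenue gives the lower multiple, and vice versa
low_multiple = price_m / max(revenue_range_m)
high_multiple = price_m / min(revenue_range_m)
print(f"Implied price/revenue: {low_multiple:.1f}x to {high_multiple:.1f}x")
```

This is a simple price-to-revenue ratio; it does not adjust for cash, debt, or other deal terms.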

Investment Implications and Market Reception

Analyst Response and Market Validation

The custom AI investor event generated broadly positive analyst responses, with multiple firms raising price targets and expressing optimism about Marvell's positioning in the AI infrastructure space. Analysts highlighted the company's announced pipeline as indicative of its ability to continue capitalizing on market opportunities and noted the growth in the company's customer base to over 10 customers (News - June 2025 - MRVL.UR).

Specific analyst reactions included B Riley Securities raising its price target to $115 from $110 while maintaining a buy rating, citing the company's strong position in the growing data center market and its large product pipeline, projected to be worth $75 billion (News - June 2025 - MRVL.UR).

Technology Innovation and Competitive Moats

Marvell's recent technological achievements, including the development of 2nm custom SRAM for next-generation AI infrastructure silicon, demonstrate continued innovation leadership. The custom SRAM delivers up to 6 gigabits of high-speed memory while reducing memory power consumption and die area, enabling chip designers to recover up to 15% of the total area of a 2nm design (News - June 2025 - MRVL.UR).

Conclusion

Marvell's revised $94 billion data center TAM forecast for CY28 reflects the company's strengthened position in a rapidly expanding AI infrastructure market. The 26% upward revision is driven by accelerating custom compute demand, hyperscaler CapEx growth, and market expansion beyond traditional cloud providers. With 18 current design wins, a pipeline of over 50 opportunities worth $75 billion in lifetime revenue, and a target of achieving 20% market share by 2028, Marvell appears well-positioned to capitalize on the AI infrastructure buildout. The company's unique end-to-end capabilities, technology leadership in advanced process nodes, and strategic focus following the automotive Ethernet divestiture support confidence in its ability to execute on this substantial market opportunity.

Given the magnitude of Marvell's TAM revision and the underlying drivers of hyperscaler CapEx growth and AI infrastructure expansion, I wanted to identify other companies positioned to benefit from this expanding market opportunity. The research highlighted that the growth extends beyond traditional semiconductor companies to encompass the broader AI infrastructure supply chain, including companies providing specialized components, manufacturing services, and supporting technologies for data center buildouts. To capture this broader opportunity set, I ran the following Screening query:

Find me companies that: manufacture semiconductors for data centers or AI applications, provide semiconductor manufacturing services or equipment, supply components for AI infrastructure including memory, storage, or networking equipment, or offer specialized services supporting data center construction and operations.

The screening results revealed 65 companies spanning the AI infrastructure ecosystem, from obvious leaders like NVIDIA and TSMC to more specialized players across the value chain. What's particularly striking is the breadth of companies positioned to benefit from this expansion, ranging from traditional semiconductor manufacturers to contract manufacturers, test equipment providers, and even construction services companies building the physical infrastructure.

Among the most compelling opportunities identified through the screening was Rambus Inc., a company that exemplifies how Marvell's TAM revision may be understating the broader opportunity. Rambus has quietly become a concentrated AI infrastructure beneficiary, with over 75% of its chip and silicon IP revenue now derived from data center and AI applications. The company delivered record Q1 2025 product revenue of $76.3 million (up 52% year-over-year) while maintaining >40% market share in DDR5 Registered Clock Drivers, critical components that address the memory bandwidth bottleneck that becomes more acute as AI workloads scale. What makes Rambus particularly interesting is its positioning for the next wave of AI deployment: inference applications that will require large amounts of standard memory infrastructure rather than specialized HBM solutions. With a debt-free balance sheet, $500+ million in cash, and multiple product expansion vectors including power management chips and MRDIMM chipsets, Rambus represents the type of specialized, high-margin infrastructure play that could see a significant re-rating as investors recognize the breadth of the AI opportunity beyond the headline names. The Research output below examines the investment case for Rambus in more detail:

Rambus Inc. (RMBS.UR) Investment Analysis

Executive Summary

Rambus Inc. represents a compelling investment opportunity within the AI and data center semiconductor ecosystem, demonstrating exceptional financial performance while maintaining strategic positioning in critical memory interface technologies. The company has achieved record financial results with strong exposure to AI infrastructure buildout, supported by market-leading positions in essential components and a diversified business model spanning product sales, IP licensing, and patent royalties.

Financial Performance and Operational Excellence

Outstanding Q1 2025 Results

Rambus delivered strong Q1 2025 financial performance across key metrics. Total revenue reached $166.7 million, representing a robust 41% year-over-year increase from $117.9 million in Q1 2024 (RMBS 8-K 04/28/25 Earnings Release). The company achieved record quarterly product revenue of $76.3 million from Memory Interface Chips, marking a substantial 52% year-over-year growth (RMBS 8-K 04/28/25 Earnings Release).

This strong top-line performance translated into impressive operating leverage, with non-GAAP operating income expanding to $76.3 million compared to $43.7 million in the prior year period, representing 75% year-over-year growth (Rambus Inc., Q1 2025 Earnings Call, Apr 28, 2025 (Presentation)). Operating margin expanded to 38% from 26% in the prior year quarter, demonstrating the company's ability to scale efficiently (RMBS 8-K 04/28/25 Earnings Release).
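The Rambus growth rates above can be reproduced from the cited figures. The sketch also divides non-GAAP operating income by total revenue, which is our own derivation rather than a figure from the filings.

```python
# Rambus quarterly figures ($M) as cited above
revenue = {"Q1 2024": 117.9, "Q1 2025": 166.7}
non_gaap_op_income = {"Q1 2024": 43.7, "Q1 2025": 76.3}

rev_growth = revenue["Q1 2025"] / revenue["Q1 2024"] - 1
oi_growth = non_gaap_op_income["Q1 2025"] / non_gaap_op_income["Q1 2024"] - 1

# Margin implied by dividing non-GAAP operating income by total revenue
non_gaap_margin = {q: non_gaap_op_income[q] / revenue[q] for q in revenue}

print(f"Revenue growth: {rev_growth:.0%}")
print(f"Non-GAAP operating income growth: {oi_growth:.0%}")
for q, m in non_gaap_margin.items():
    print(f"{q} implied non-GAAP operating margin: {m:.0%}")
```

The growth rates match the 41% and 75% cited. The implied non-GAAP margins of roughly 37% and 46% are higher than the 26% and 38% operating margins quoted above, which suggests those margins are measured on a different basis (possibly GAAP); the earnings release is the place to confirm.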

Exceptional Cash Generation and Balance Sheet Strength

The company demonstrated outstanding cash generation capabilities with $77.4 million in cash from operations during Q1 2025, compared to $39.1 million in the prior year quarter, representing 98% year-over-year growth (Rambus Inc., Q1 2025 Earnings Call, Apr 28, 2025 (Presentation)). Rambus maintains a fortress balance sheet with $514.4 million in total cash and marketable securities as of Q1 2025, up from $391.1 million a year ago (Rambus Inc., Q1 2025 Earnings Call, Apr 28, 2025 (Presentation)). The company operates debt-free with stockholders' equity of $1.16 billion, providing substantial financial flexibility for strategic initiatives and growth investments (RMBS 10-Q Q1 2025).
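The cash metrics can be checked the same way. The last ratio relates operating cash flow to the $166.7 million of Q1 2025 revenue cited earlier; it is derived here rather than reported by the company.

```python
# Rambus cash metrics ($M) as cited above
cfo = {"Q1 2024": 39.1, "Q1 2025": 77.4}     # cash from operations
cash = {"Q1 2024": 391.1, "Q1 2025": 514.4}  # total cash and marketable securities
Q1_2025_REVENUE = 166.7                      # total revenue, cited earlier

cfo_growth = cfo["Q1 2025"] / cfo["Q1 2024"] - 1
cash_growth = cash["Q1 2025"] / cash["Q1 2024"] - 1
cfo_to_revenue = cfo["Q1 2025"] / Q1_2025_REVENUE

print(f"Operating cash flow growth: {cfo_growth:.0%}")
print(f"Cash and securities growth: {cash_growth:.0%}")
print(f"Operating cash flow as a share of revenue: {cfo_to_revenue:.0%}")
```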

Long-Term Growth Trajectory

Beyond quarterly results, Rambus has sustained its growth momentum, with record 2024 product revenue of $247 million capping a 28% five-year compound annual growth rate (CAGR) in product revenue (Rambus Inc. Presents at Baird Global Consumer, Technology & Services Conference 2025, Jun-03-2025 09:40 AM (Presentation)). The company generated $231 million in cash from operations in 2024, evidence of strong and consistent cash generation (Rambus Inc. Presents at Baird Global Consumer, Technology & Services Conference 2025, Jun-03-2025 09:40 AM (Presentation)).
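A 28% five-year CAGR ending at $247 million implies a much smaller starting point. The sketch backs it out, assuming the five-year window runs from 2019 to 2024; the resulting 2019 figure is an implied value, not a reported one.

```python
product_rev_2024 = 247.0  # $M, record 2024 product revenue
cagr = 0.28
YEARS = 5  # assumption: 2019 -> 2024 window

growth_multiple = (1 + cagr) ** YEARS
implied_2019 = product_rev_2024 / growth_multiple

print(f"Implied 2019 product revenue: ~${implied_2019:.0f}M")
print(f"Growth multiple over the period: {growth_multiple:.2f}x")
```

Under that assumption, product revenue grew about 3.4x over the period, from roughly $72 million.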

Strategic Positioning in AI and Data Center Markets

Direct AI Infrastructure Exposure

Rambus is strategically positioned to capitalize on the AI-driven data center boom through its specialized semiconductor solutions, with over 75% of its chip and silicon IP revenue derived from data center and AI applications (Rambus Inc. Presents at Baird Global Consumer, Technology & Services Conference 2025, Jun-03-2025 09:40 AM (Presentation)). The company describes itself as having "amplified opportunity in data center fueled by AI with expanding product portfolio and sustained technical leadership" (Rambus Inc., Q1 2025 Earnings Call, Apr 28, 2025 (Presentation)).

Management highlighted strong demand drivers, noting "We do see some very nice tailwinds for the server market, both in the traditional server market and the AI servers that drives demand for more bandwidth and capacity in those servers, which, as a consequence, drive demand for our products" (Rambus Inc. Presents at Bank of America Global Technology Conference 2025, Jun-05-2025 08:40 AM).

Market Leadership in Critical Components

The company maintains sustained market leadership in core DDR5 Registered Clock Drivers (RCDs), which are essential components for high-performance memory systems in data centers and AI applications. Rambus has achieved DDR5 generation market share of "a little north of 40% last year," compared to approximately 25% market share in the DDR4 generation (Rambus Inc. Presents at Bank of America Global Technology Conference 2025, Jun-05-2025 08:40 AM). The company targets 40% to 50% market share as its strategic goal (Rambus Inc. Presents at Bank of America Global Technology Conference 2025, Jun-05-2025 08:40 AM).

The addressable market for RCD chips is approximately $750 million, with companion chips adding an additional $600 million serviceable addressable market (SAM) to create a combined $1.35 billion opportunity (Rambus Inc. Presents at Bank of America Global Technology Conference 2025, Jun-05-2025 08:40 AM). High-performance client systems are expected to require similar chips, adding "a couple of million dollars more of SAM" to the total addressable market (Rambus Inc. Presents at Bank of America Global Technology Conference 2025, Jun-05-2025 08:40 AM).

Technology Innovation and Development Pipeline

Rambus continues to advance its product portfolio with new solutions specifically targeting AI and data center applications. The company reported continued progress in new products, including Power Management Integrated Circuits (PMICs) and MRDIMM 12800 chipset, with qualifications currently ongoing (RMBS 8-K 04/28/25 Earnings Release). These developments represent expansion into adjacent high-growth markets within the AI infrastructure ecosystem.

The cadence of product development has accelerated significantly due to AI demands. Management noted that "In the DDR4 generation of product a few years ago, we had to develop an RCD chip every other year. Now today, in the DDR5 generation of products, we have to develop a new chip every year" (Rambus Inc. Presents at Bank of America Global Technology Conference 2025, Jun-05-2025 08:40 AM). This acceleration reflects the rapid pace of AI infrastructure evolution and Rambus's ability to keep pace with customer requirements.

Diversified Business Model and Competitive Advantages

Revenue Diversification

Rambus operates a diversified business model combining product revenue from Memory Interface Chips with licensing revenue from its extensive patent portfolio. In Q1 2025, product revenue represented 46% of total revenue, royalties comprised 44%, and contract and other revenue accounted for 10% (RMBS 10-Q Q1 2025). This diversification provides both growth exposure to AI infrastructure buildout through product sales and stable, recurring income through licensing agreements.
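The percentage mix can be translated back into dollars using the $166.7 million Q1 2025 revenue figure cited earlier:

```python
import math

total_revenue = 166.7  # $M, Q1 2025
mix = {"product": 0.46, "royalties": 0.44, "contract and other": 0.10}

# Sanity check: the shares should cover all revenue
assert math.isclose(sum(mix.values()), 1.0)

segment_dollars = {name: share * total_revenue for name, share in mix.items()}
for name, value in segment_dollars.items():
    print(f"{name}: ~${value:.1f}M")
```

The implied product revenue of about $76.7 million is slightly above the reported $76.3 million because the 46% share is rounded.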

The patent licensing business generates approximately $210 million annually with 100% margins and is "completely immune" to tariff concerns (Rambus Inc. Presents at Bank of America Global Technology Conference 2025, Jun-05-2025 08:40 AM). The silicon IP business, generating approximately $120 million annually with 10% to 15% growth rates, has been "actually driven by the demand for HBM over the last couple of years" (Rambus Inc. Presents at Bank of America Global Technology Conference 2025, Jun-05-2025 08:40 AM).

Innovation-Driven Competitive Moat

The company's continued innovation feeds both its patent portfolio and product roadmap, creating sustainable competitive advantages. As of March 31, 2025, Rambus's semiconductor, security and other technologies are covered by 2,244 U.S. and foreign patents, with an additional 525 patent applications pending in various countries (RMBS 10-Q Q1 2025). The total patent portfolio encompasses approximately 2,700 patents and patents pending (Rambus Inc. Presents at Baird Global Consumer, Technology & Services Conference 2025, Jun-03-2025 09:40 AM (Presentation)).

Rambus's technical leadership in memory interface technologies and silicon IP represents a significant moat in serving the performance-critical requirements of AI and advanced computing applications. The company cites 35 years of technology leadership in the semiconductor industry, supported by an engineering-focused organization in which over 70% of its approximately 725 employees work in engineering roles (Rambus Inc. Presents at Baird Global Consumer, Technology & Services Conference 2025, Jun-03-2025 09:40 AM (Presentation)).

Market Dynamics and Growth Drivers

AI-Driven Memory Requirements

The fundamental driver of Rambus's growth opportunity stems from the increasing memory bandwidth and capacity requirements of AI applications. Management explained that "Whether it's an AI server or a traditional server, there is a very high demand for more bandwidth and more capacity" because "Server technology moves faster than memory technology" and "More and more cores on every CPU, every core needs its dedicated memory" (Rambus Inc. Presents at Bank of America Global Technology Conference 2025, Jun-05-2025 08:40 AM).

The number of memory channels per CPU is expanding significantly, from approximately 8 memory channels per CPU in the DDR4 generation to a mix of 8 and 12 channels in DDR5, converging to 12 channels, with expectations that "at the end of the DDR5 generation of products, people will probably converge to 16 channels" (Rambus Inc. Presents at Bank of America Global Technology Conference 2025, Jun-05-2025 08:40 AM). This channel expansion directly drives demand for Rambus's memory interface solutions.

AI Inference Opportunity

Beyond AI training applications, Rambus is positioned to benefit from the growing AI inference market. Management noted that "Typically, inference systems are simpler than training systems. A lot of things that are currently being used on GPUs and HBM can actually be run on more standard processors on the inference side. So that will drive demand for us" (Rambus Inc. Presents at Bank of America Global Technology Conference 2025, Jun-05-2025 08:40 AM). This represents an additional growth vector as AI deployment shifts toward inference applications.

Product Portfolio Expansion

Rambus is expanding beyond its core RCD business into companion chips and adjacent markets. The company introduced power management chips in April 2024, with platforms using these chips expected to start ramping in the second half of 2025, with "the bulk of that growth in 2026" (Rambus Inc. Presents at Bank of America Global Technology Conference 2025, Jun-05-2025 08:40 AM). The objective for companion chips is "to reach about 20% share at this point in time" (Rambus Inc. Presents at Bank of America Global Technology Conference 2025, Jun-05-2025 08:40 AM).

The MRDIMM chipset represents another significant opportunity, enabling customers to "double the amount of memory and you multiplex the access of the memory on to the memory bus" with "exactly the same infrastructure, the same CPU architecture" (Rambus Inc. Presents at Bank of America Global Technology Conference 2025, Jun-05-2025 08:40 AM). This product is linked to a platform launch expected in 2026, with initial ramp anticipated in the second half of 2026 (Rambus Inc. Presents at Bank of America Global Technology Conference 2025, Jun-05-2025 08:40 AM).

Risk Factors and Considerations

Market Concentration and Cyclicality

Rambus's heavy concentration in data center and AI markets creates both opportunity and risk. While this positioning enables the company to benefit from AI infrastructure investment, it also creates cyclical exposure to infrastructure spending patterns. The top 5 customers represented approximately 71% of consolidated revenue for Q1 2025, indicating significant customer concentration risk (RMBS 10-Q Q1 2025).

Competitive Dynamics

The memory interface market typically relies on multiple suppliers, usually three, for ecosystem security, which limits Rambus's maximum achievable market share (Rambus Inc. Presents at Bank of America Global Technology Conference 2025, Jun-05-2025 08:40 AM). Primary competitors include Montage (a Chinese company) and Renesas, creating ongoing competitive pressure (Rambus Inc. Presents at Bank of America Global Technology Conference 2025, Jun-05-2025 08:40 AM).

Geopolitical and Trade Considerations

While Rambus has limited direct exposure to China (low single-digit percentage of business), the company monitors potential indirect impacts from tariffs and trade restrictions (Rambus Inc. Presents at Bank of America Global Technology Conference 2025, Jun-05-2025 08:40 AM). The company's front-end supply chain is in Taiwan, with back-end supply chain in Taiwan and Korea, providing some geographic diversification (Rambus Inc. Presents at Bank of America Global Technology Conference 2025, Jun-05-2025 08:40 AM).

Forward Guidance and Outlook

Q2 2025 Guidance

Management provided Q2 2025 guidance indicating continued strong performance across key metrics. Product revenue is expected to range from $77-83 million, suggesting sustained momentum in the Memory Interface Chips business (RMBS 8-K 04/28/25 Earnings Release). Licensing billings are projected at $64-70 million, while total operating costs are guided to $90-94 million on a non-GAAP basis, demonstrating disciplined expense management while investing in growth initiatives (RMBS 8-K 04/28/25 Earnings Release).
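The guidance midpoints, and the sequential growth they imply for product revenue relative to Q1's $76.3 million, can be computed as follows:

```python
# Q2 2025 guidance ranges ($M)
guidance = {
    "product revenue": (77, 83),
    "licensing billings": (64, 70),
    "non-GAAP operating costs": (90, 94),
}
Q1_PRODUCT_REVENUE = 76.3  # $M, Q1 2025 actual

midpoints = {name: (low + high) / 2 for name, (low, high) in guidance.items()}
seq_growth = midpoints["product revenue"] / Q1_PRODUCT_REVENUE - 1

for name, mid in midpoints.items():
    print(f"{name}: midpoint ${mid:.0f}M")
print(f"Implied sequential product revenue growth at midpoint: {seq_growth:.1%}")
```

At the midpoint, product revenue would grow about 4.8% quarter over quarter.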

Long-Term Margin Targets

The company maintains a long-term target for product gross margin of 60% to 65%, with management noting that "If you look over the last sort of 3 years' annual performance, we've been operating at 61% to 65%" (Rambus Inc. Presents at Bank of America Global Technology Conference 2025, Jun-05-2025 08:40 AM). This margin profile reflects the company's focus on high-value, differentiated products rather than commodity semiconductors.

Investment Thesis Summary

Rambus Inc. presents a compelling investment opportunity within the AI and data center semiconductor ecosystem based on several key factors:

Financial Excellence: The company demonstrates exceptional financial performance with 41% revenue growth, 75% non-GAAP operating income growth, and 98% operating cash flow growth in Q1 2025, supported by a debt-free balance sheet with over $500 million in cash.

Strategic Market Position: With over 75% of chip and silicon IP revenue from data center and AI applications, Rambus maintains market leadership in essential memory interface components with >40% DDR5 market share and a $1.35 billion addressable market opportunity.

Diversified Business Model: The combination of growing product revenue (52% year-over-year growth in Q1 2025), stable licensing income ($210 million annually at 100% margins), and expanding silicon IP business ($120 million growing 10-15% annually) provides both growth and stability.

Innovation Leadership: A 2,700+ patent portfolio, 35 years of semiconductor experience, and an engineering-focused organization (over 70% of employees in engineering roles) support continued technological leadership and competitive moats.

Growth Catalysts: Multiple product expansion opportunities including companion chips, MRDIMM chipsets, client clock drivers, and HBM4 controllers provide diversified growth vectors beyond the core RCD business.

The company's positioning at the intersection of AI infrastructure demand and memory performance requirements, combined with strong financial execution and technological leadership, makes Rambus a compelling investment consideration among AI and data center semiconductor opportunities.

To further research Marvell, Rambus, or any other company, head over to Portrait today!